Artificial Intelligence (AI) has become a transformative technology that promises advancements in a variety of fields, from healthcare to finance and beyond. However, the rapidly growing interest in integrating AI systems into sometimes critical processes brings with it a major challenge: accountability.

This policy brief presents a framework to help organizations adopt and strengthen AI accountability practices. Our proposed organizational model draws on best practices and research to provide clear and practical guidance.

We aim to advance real-world accountability efforts related to AI and to understand how our work can further assist current initiatives. To help adapt our research to practical needs, join the CONSULTATION and provide feedback on the framework’s quality, usefulness and effectiveness.

The Problem – Defining accountabilities remains a challenge when it comes to AI

As a fundamental principle, accountability entails taking responsibility for actions and providing satisfactory justifications [1]. However, in the realm of AI, establishing accountability becomes complicated due to the distinctive attributes of AI systems. AI systems work with complex algorithms, large data sets and self-learning capabilities, which makes it more difficult to decipher the reasons for AI-generated outcomes than for human decisions. Given this opacity and lack of interpretability, and the prevailing view that AI cannot be held directly accountable for its actions, providers and users of AI systems are left in a precarious position. This helps explain why the practical implementation of AI accountability is still unresolved: the attributes that make AI powerful also challenge traditional notions of responsibility.

Practitioners frequently highlight three fundamental reasons why accountability for AI remains a challenge. First, there is a lack of clarity and consensus around what kind of accountability is required in specific operational contexts [2]. Indeed, while some initial frameworks for AI accountability have been proposed, standardized, cross-industry perspectives and guidelines that can be pragmatically implemented are still missing [3]. This gap has led to a vicious circle in which the absence of real-world case studies perpetuates the ambiguity. Second, the underlying obstacles preventing accountability initiatives (such as limited understanding of the concept in the AI field, budget constraints, misaligned incentives, and regulatory uncertainties) are complex and interrelated. They compound one another and hinder the definition of appropriate accountability measures. Third, and perhaps most concerningly, the current lack of standardized best practices and oversight has led to a reluctant response from many organizations. Without clear and unified guidance, some have adopted a cautious wait-and-see stance that postpones urgently needed work.

Some work has been initiated on the regulatory side. The proposed EU AI Act [4] sets clear requirements where deemed necessary and guides risk assessments throughout a system’s life cycle, while also calling for the definition of accountabilities. Similarly, the US Blueprint for an AI Bill of Rights sets out broad principles to adhere to in the development, use, and deployment of AI, although these principles are not enforceable [5]. These initiatives reflect increasing efforts to clearly guide responsible AI behavior and clarify related obligations in order to address obstacles and urgent needs related to AI governance [6]. Accountability is usually integrated as a fundamental prerequisite, but the concrete requirements and the implementation of the demanded accountability frameworks are left to other actors.

Proposing a Practical Organizational Accountability Framework for AI Systems

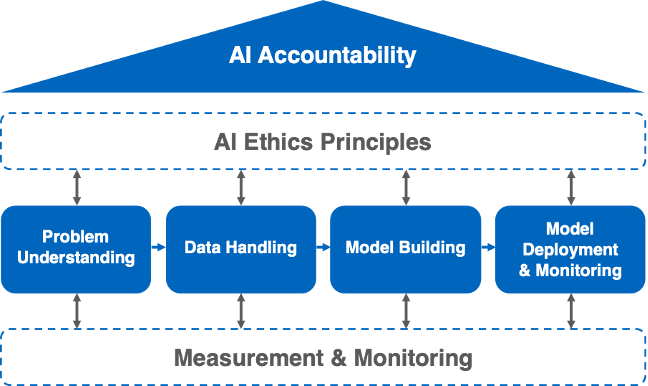

Our framework proposes a solution to the definition of accountabilities by embedding AI ethics requirements at each step of the AI development lifecycle. Accountability is understood as taking responsibility and providing justification for one’s actions. It is therefore implemented in a risk-based manner: risks to AI ethics principles are identified at each step, and prevention or mitigation measures are put in place. The core idea is to break ethical obligations down into more concrete actions for which responsibilities and, thus, accountabilities can be defined more clearly. This process is accompanied by ongoing measurement and monitoring to allow for a faster reaction when potential harm is identified.

AI Accountability – Reaching Accountability by Deriving Concrete Obligations

Determining accountability in practice remains an open challenge, as acknowledged by international bodies like UNESCO and the EU's High-Level Expert Group on AI [7, 8]. As the EU recognizes, ensuring AI systems are fair, aligned with ethical values, and have suitable governance is vital as these technologies increasingly impact many lives [9]. The OECD definition further specifies that accountability requires due attention throughout an AI system's lifecycle by all relevant actors [10].

To address these challenges, regulatory efforts are under way worldwide (e.g., in the EU [4], Brazil [11], or Japan [12]). While legal frameworks are indispensable for ultimately clarifying and unifying the current diversity of AI governance streams, their provisions can only ever guide practical implementations. It is the legislator’s responsibility to set clear underlying principles and identify potential red flags; by their nature, however, legal provisions lack low-level, practical detail. For example, refinements have been demanded on the workability of the EU AI Act proposal [13].

Detailed policy recommendations and standards can supplement the regulatory provisions with more detailed guidance, and they are currently under development for ethically aligned AI (e.g., in the EU [8] or Australia [14]). Standards such as the IEEE’s Ethically Aligned Design framework [15] detail the requirements for responsible AI governance and present accountability as an integral part. As they are largely still in development, they often do not yet provide clear step-by-step guidance, but rather general recommendations for moving towards ethical and responsible AI design, development, or deployment.

With our framework, we want to contribute to the definition of accountability for AI systems and to the demanded concretization of accountability approaches. We take an organizational perspective and start from the theoretical definition of accountability as taking responsibility and providing appropriate justification. From this, we derive the need to clarify concrete actions (i.e., what fulfilling the responsibility of ethical behavior involves) and to assign roles along the AI development lifecycle, which allows for oversight and thus provides a basis for justification. Our framework therefore aims to break down the obligations arising from the agreed principles of AI ethics into more concrete actions and to define measures that allow responsibilities to be assigned on this basis.

AI Ethics Principles – Ensuring Responsible Behavior through a Risk-Based Approach

In our pursuit of establishing a comprehensive and practical framework for AI accountability, we approach accountability as a set of responsibilities that result from the need to adhere to the ethical principles of AI. These responsibilities or obligations are addressed through a risk management approach, which lays the foundation for defining stakeholders' roles and responsibilities in risk prevention and resolution processes. For effective implementation of ethical principles throughout the AI development cycle, we have identified five key requirements for AI risk management [16] on which our framework is based.

First, risk management processes must strike a balance between specialization and generalization. To be effective, they need to be adaptable across sectors while acknowledging the unique organizational contexts in which specific AI systems are operated. Such a balance allows for comprehensive frameworks that address sector-specific risks while, at the same time, aligning with common risk management principles.

Second, the extensibility of risk management approaches is crucial to ensure operability even when new risks or circumstances emerge. Given the rapid evolution of the AI landscape, risk management systems must be designed to adapt easily to evolving risks, use cases and regulatory environments, so that organizations can address them proactively and remain compliant.

Third, it is important to solicit representative feedback from diverse stakeholders, including experts, practitioners, users, and affected communities. The inclusion of these different perspectives allows for a more holistic understanding of the risks that arise and the development of risk management strategies that serve the interests of all stakeholders.

Fourth, transparency is a cornerstone of effective risk governance. The tools employed must be designed to be easily understood by both experts and non-experts in order to provide clarity and enable effective intervention when needed. Promoting transparency enables stakeholders to actively participate in risk governance and decision-making processes.

Finally, a long-term orientation is essential for successful and sustainable risk mitigation. This can be achieved through continuous monitoring and updating to identify and prevent unforeseen or evolving risks over time. By adopting a forward-looking perspective, organizations can proactively address emerging challenges and ensure the ongoing trustworthiness of their AI systems.

In sum, as the landscape of AI technologies evolves, the need for practical methodologies and frameworks to ensure accountability for the risks of AI systems becomes increasingly clear. Risk management is one way of ensuring the implementation of ethical AI principles along the AI development lifecycle. The requirements for effective risk management outlined above form the basis for our trustworthy development process for AI systems, which details concrete measures for breaking down obligations into actionable and assignable tasks.

A Trustworthy Development Process for AI Systems

While defining accountabilities, i.e., concrete responsibilities and the ability to justify related actions, is difficult at the level of AI ethics principles, it becomes more feasible when the principles are broken down into concrete steps for risk mitigation. The heart of this framework is therefore a development process describing the actions required for responsible or trustworthy AI systems. A consensus is emerging in the conceptual and practice-oriented literature around the measures that can be implemented during system development to prevent or reduce risks during system operation.

Measures are required at two levels. Activities related to strategic decision-making and giving guidance on the ethical development of AI systems from a general perspective must be defined broadly on an organizational level. Such measures include, e.g., the development of an organizational AI governance strategy or the creation of codes of conduct. Further, if internal or external validation of ethical behavior is required, a strategy or entity can be defined for the organization as a whole. Guidance on supporting education on AI practices inside or outside an organization can be laid down independent of concrete projects.

In contrast, specific risk management and mitigation measures depend heavily on the particular use case or context and are therefore defined at the project level. These measures can be structured along the AI development lifecycle and fall into four activity categories: (1) planning, (2) assessment & ensuring, (3) creation, and (4) communication.

More specifically, planning activities are required to define project objectives and align them with the strategic company or project goals. They include, for example, measures to specify responsibilities or determine system requirements or thresholds. Assessment and ensuring activities cover mechanisms for assessing compliance with certain obligations of AI providers and taking appropriate action where needed. Examples include standardized pre- and post-development impact assessments (incl. technical testing) of specific system properties and impacts, as well as assessments of system dependencies, the legality of data processing and development, team properties and capabilities, options for audits (incl. seals of approval and certifications), truthfulness, and ensuring remuneration. Creation activities relate to obligations that require or affect the active (re-)design of the system or of organizational processes, such as participatory development or public intervention mechanisms. Lastly, communication activities are linked to the disclosure of certain information, the communication of system definitions, purpose, limitations, risks, and use, and the education of staff, users, and the general public.

Such a process for the trustworthy development of AI systems outlines the measures required to mitigate and manage risks and thereby clarifies the responsibilities that arise with the endeavor to build trustworthy AI. Transparently following and communicating such a process can help justify measures and thus contribute significantly to the definition of accountabilities. Finally, the process also indicates the responsible actors or roles, depending on the stage of the development life cycle and the development activities with which they are associated.
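As an illustration only, the idea of attributing concrete measures to roles can be sketched as a small data structure. The sketch below is not part of the framework itself: the class names, the lifecycle stage labels, and the role labels are hypothetical placeholders, and the example measures are loosely taken from the activity categories described above.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    """The four project-level activity categories named in the text."""
    PLANNING = "planning"
    ASSESSMENT = "assessment & ensuring"
    CREATION = "creation"
    COMMUNICATION = "communication"


@dataclass(frozen=True)
class Measure:
    name: str
    category: Category
    lifecycle_stage: str   # hypothetical stage label, chosen for illustration
    responsible_role: str  # hypothetical role label, chosen for illustration


def accountabilities_by_role(measures):
    """Group measure names by the role that is responsible for them."""
    grouped = defaultdict(list)
    for measure in measures:
        grouped[measure.responsible_role].append(measure.name)
    return dict(grouped)


# Example entries; stages and roles are assumptions, not prescribed assignments.
measures = [
    Measure("Specify responsibilities", Category.PLANNING, "ideation", "project lead"),
    Measure("Pre-development impact assessment", Category.ASSESSMENT, "design", "risk officer"),
    Measure("Participatory development", Category.CREATION, "development", "product owner"),
    Measure("Disclose system limitations", Category.COMMUNICATION, "deployment", "project lead"),
]

register = accountabilities_by_role(measures)
for role, tasks in register.items():
    print(f"{role}: {tasks}")
```

Keeping the category, stage, and role on each measure means the same register can be regrouped by lifecycle stage or by activity category when a different view is needed.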

Measuring & Monitoring

To ensure proper realization and true impact, measures to increase accountability should be accompanied by ongoing measurement and monitoring. Here, it is particularly important to consider how the measures affect the ultimate goal of embedding ethical principles in the AI system. Therefore, instead of monitoring the individual actions taken, their overall effect can be observed by continuously measuring how well the resulting system adheres to ethical principles. To do so, ethical principles must be quantified so that they can be measured on a scale and changes in them can be observed.

In our framework, we propose to quantify, and hence measure variations in, the realization of ethical principles by defining criteria that consist of tangible, scalable characteristics and an associated target value. Characteristics are those properties of the system or of related processes that reflect the current state of a system's adherence to a given ethical principle. For example, when measuring an AI system’s transparency, the share of all user requests that are inquiries about understandability could be a suitable characteristic. Characteristics should be tangible and scalable so that a numeric value can be assigned to them. These values are the scores that relate to an identified characteristic and quantify the AI system’s status regarding compliance with the ethical principle. A scale that defines the optimal state shall be defined for each characteristic.

For example, in the case of measuring an AI system’s state regarding transparency by assessing the ratio of user inquiries that relate to understandability, a value of only 10% might be considered acceptable. In comparison, a value of 60% could reflect a critical threshold indicating that the system is not regarded as transparent enough. Continuously comparing the determined relevant system properties against the developed scale allows us to measure compliance with the targeted ethical principle implementation.
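The transparency example can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a prescribed implementation: the names `Criterion` and `inquiry_ratio`, the stage labels, and the observation counts are invented for illustration, and the intermediate "watch" band between the acceptable value (10%) and the critical threshold (60%) is our own assumption about how values in between might be treated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """A measurable characteristic of an ethical principle plus its scale."""
    principle: str
    characteristic: str
    acceptable_max: float  # values up to this are considered acceptable
    critical_min: float    # values from this upward are considered critical

    def status(self, value: float) -> str:
        if value <= self.acceptable_max:
            return "acceptable"
        if value >= self.critical_min:
            return "critical"
        return "watch"  # assumed intermediate band, not defined in the text


def inquiry_ratio(understandability_inquiries: int, total_requests: int) -> float:
    """Share of all user requests that are inquiries about understandability."""
    if total_requests <= 0:
        raise ValueError("total_requests must be positive")
    return understandability_inquiries / total_requests


transparency = Criterion(
    principle="transparency",
    characteristic="share of user requests that ask about understandability",
    acceptable_max=0.10,
    critical_min=0.60,
)

# Hypothetical observations: (understandability inquiries, all user requests).
observations = {"pilot": (8, 100), "launch": (35, 100), "after update": (130, 200)}

statuses = {
    stage: transparency.status(inquiry_ratio(inquiries, total))
    for stage, (inquiries, total) in observations.items()
}
for stage, status in statuses.items():
    print(f"{stage}: {status}")
```

With these made-up counts, the pilot stage falls below the 10% value, the launch stage sits between the two values, and the last stage exceeds the 60% threshold and would call for action.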

Both characteristics and values must be determined based on the specific use case, system, and context to identify relevant system requirements and thresholds. Such a measurement should therefore be initiated during model building at the latest. Regular comparison and alignment before and during development, as well as during deployment, use, or reiteration of the system, allows monitoring of the system’s ability to adhere to the defined ethical principles and raises red flags that call for action.

Conclusion

The difficulty of defining accountabilities for the outcomes of AI systems has frequently been highlighted as severe. The lack of clarity around the actions AI providers must take to solve this problem, together with the lack of best practices and the resulting, often-observed wait-and-see attitude, further hinders an effective resolution. Thus, there has been a call for solutions to enhance clarity, accessibility, and comprehensiveness around the measures required to support accountability.

By founding our framework on ethics principles applied at each stage of development and use, we aim to operationalize our conceptualization of accountability as a holistic and integrated responsibility. Taking a lifecycle view that systematically enforces ethical norms from ideation through all subsequent phases, our framework aims to make accountability an integrated part of standard organizational processes for trustworthy AI. Because it comprehensively lays out the steps to take, the framework can be adapted to the use case of the developer and provider. Finally, this lifecycle approach involves implementing ethics best practices from the initial design stage through routine use. A vital component is establishing mechanisms to measure [17] and monitor adherence to these principles at each lifecycle stage, from ideation to post-market implementation.

In summary, our framework can support solving the issue of defining accountability for an AI system's actions. It fosters clarity by offering an overview of required measures. At the same time, these more concrete measures help define accountabilities, as concrete tasks can be attributed to roles in system development. The modular structure and the step-by-step breakdown of actions within our framework facilitate accessibility and practical implementation. In addition, the comprehensive nature of the framework enables system-independent adaptability, which makes the framework versatile and allows for broader adaptation to various use cases.

To further adapt these solutions to practical needs, join the CONSULTATION and provide valuable feedback!

References

1. Cambridge Dictionary. (2022, May 11). Accountability. https://dictionary.cambridge.org/dictionary/english/accountability?q=Accountability

2. Stix, C. (2021). Actionable principles for artificial intelligence policy: Three pathways. Science and Engineering Ethics, 27(15), 1-15.

3. Tekathen, M., & Dechow, N. (2013). Enterprise risk management and continuous re-alignment in the pursuit of accountability: a German case. Manag. Account. Res. 24, 100–121. doi: 10.1016/j.mar.2013.04.005

4. Regulation 2021/0106. Proposal for a Regulation of the European Parliament and of the Council: Laying down harmonised rules on artificial intelligence (artificial intelligence act) and amending certain Union legislative acts. https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A52021PC0206

5. White House Office of Science and Technology Policy (OSTP). (2022). Blueprint for an AI Bill of Rights – Making Automated Systems work for the American People. Washington: White House. Retrieved from https://www.whitehouse.gov/ostp/ai-bill-of-rights/

6. Loi, M., & Spielkamp, M. (2021, July). Towards accountability in the use of artificial intelligence for public administrations. In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society (pp. 757-766).

7. UNESCO. (2021, November). Recommendation on the Ethics of Artificial Intelligence. https://unesdoc.unesco.org/ark:/48223/pf0000381137

8. High-Level Expert Group on Artificial Intelligence (AI HLEG). (2019). Ethics Guidelines for Trustworthy AI. Brussels: European Commission. Available at: https://digital-strategy.ec.europa.eu/en/library/ethics-guidelines-trustworthy-ai

9. European Council. (2020, August). Special meeting of the European Council – Conclusions (EUCO 13/20). https://www.consilium.europa.eu/media/45910/021020-euco-final-conclusions.pdf

10. OECD. (2021). Tools for trustworthy AI: A framework to compare implementation tools for trustworthy AI systems. OECD Digital Economy Papers, No. 312, OECD Publishing, Paris. https://doi.org/10.1787/008232ec-en

11. De Agência Senado. (2022). Comissão de juristas aprova texto com regras para inteligência artificial. Senado Federal. https://www12.senado.leg.br/noticias/materias/2022/12/01/comissao-de-juristas-aprova-texto-com-regras-para-inteligencia-artificial

12. Japan METI. (2021). ‘Governance Guidelines for AI Principles in Practice Ver. 1.1' compiled (METI/Ministry of Economy, Trade and Industry). Available at: https://www.meti.go.jp/press/2021/01/20220125001/20220124003.html

13. German AI Association. (2023). Towards the finish line: Key issues and proposals for the trilogue. German AI Association. https://ki-verband.de/wp-content/uploads/2023/07/Position-Paper_AI-Act-Trilogue_GermanAIAssociation.pdf

14. Australian Government. (2019). Australia’s Ethics Framework. A Discussion Paper. Department of Industry, Innovation and Science. https://www.csiro.au/en/research/technology-space/ai/ai-ethics-framework

15. IEEE. (2019). Ethically aligned design. IEEE. https://standards.ieee.org/wp-content/uploads/import/documents/other/ead_v2.pdf

16. Hohma, E., Boch, A., Trauth, R., & Lütge, C. (2023). Investigating accountability for Artificial Intelligence through risk governance: A workshop-based exploratory study. Frontiers in Psychology, 14, 1073686.

17. Mantelero, A. (2020). Elaboration of the feasibility study. Council of Europe.
